Why does gradient descent take the negative gradient?

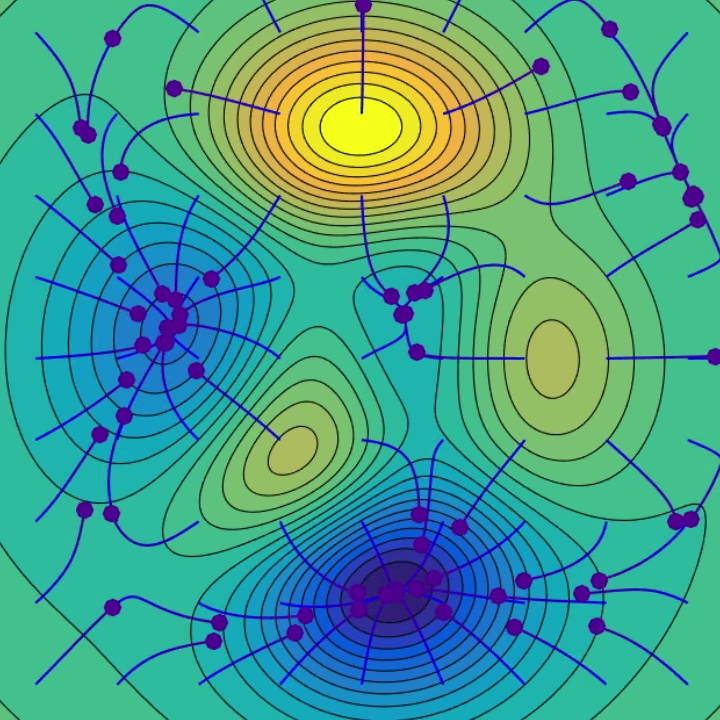

Imagine standing halfway up a mountain. It's cold, windy, and our only goal is simple: get to the lowest point in the valley.

But here's the problem: we are blindfolded. We can't see the landscape, the valleys, or even our own feet. The only information we're given is: "The ground slopes this way."

So what do we do?

We trust the slope and step in the opposite direction. That, in essence, is gradient descent.

The Formula Behind the Intuition

In machine learning, gradient descent is an optimization algorithm used to minimize a cost (or loss) function by repeatedly tweaking a model's parameters, such as the weights of a neural network. Its core update rule is:

$$w_{\text{new}} = w_{\text{old}} - \eta \cdot \nabla J(w_{\text{old}})$$

Where:

- \(w\) is the weight (or parameter),

- \(\eta\) is the learning rate (a small positive constant),

- \(\nabla J(w)\) is the gradient of the cost function with respect to \(w\).
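To make the update rule concrete, here is a minimal Python sketch that applies it repeatedly. It assumes a toy cost function \(J(w) = (w - 3)^2\), whose gradient is \(2(w - 3)\) and whose minimum sits at \(w = 3\). The cost function, the function names, the starting point, the learning rate, and the number of steps are all illustrative choices, not part of the original text.

```python
def cost(w: float) -> float:
    """Toy cost function (assumed for illustration): J(w) = (w - 3)^2, minimized at w = 3."""
    return (w - 3.0) ** 2


def grad(w: float) -> float:
    """Derivative of the toy cost: dJ/dw = 2 * (w - 3)."""
    return 2.0 * (w - 3.0)


def gradient_descent(w0: float, eta: float = 0.1, steps: int = 100) -> float:
    """Repeatedly apply w_new = w_old - eta * grad(w_old) and return the final weight."""
    w = w0
    for _ in range(steps):
        w = w - eta * grad(w)  # step against the slope
    return w


if __name__ == "__main__":
    # Start on either side of the minimum; both runs should end up near w = 3.
    for start in (0.0, 10.0):
        w_final = gradient_descent(w0=start)
        print(f"start = {start:5.1f} -> final w = {w_final:.6f}, cost = {cost(w_final):.2e}")
```

For this particular cost, each step multiplies the distance to the minimum by \(1 - 2\eta = 0.8\), which is why the weight closes in on 3 from either side.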

What If the Gradient is Positive?

Suppose we're at a point where the slope is positive: \(\nabla J(w) = +0.6\), with learning rate \(\eta = 0.1\). The update rule becomes:

$$w_{\text{new}} = w_{\text{old}} - 0.1 \cdot 0.6 = w_{\text{old}} - 0.06$$

The weight decreases. A positive slope means the cost rises as \(w\) grows, so to go downhill we move in the opposite direction, i.e., we decrease the weight.
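The arithmetic above can be checked with a short Python sketch. To get a concrete function whose slope is \(+0.6\), it assumes the hypothetical cost \(J(w) = w^2\) (gradient \(2w\)) evaluated at \(w_{\text{old}} = 0.3\); that cost function and starting point are chosen only so the numbers match the example.

```python
def cost(w: float) -> float:
    """Hypothetical cost used only for this example: J(w) = w^2."""
    return w ** 2


def grad(w: float) -> float:
    """Gradient of J(w) = w^2."""
    return 2.0 * w


eta = 0.1
w_old = 0.3               # assumed starting point where the slope is +0.6
g = grad(w_old)           # 2 * 0.3 = 0.6 (positive slope)
w_new = w_old - eta * g   # 0.3 - 0.06 = 0.24: the weight decreases

print(f"gradient = {g:.2f}")
print(f"w: {w_old:.2f} -> {w_new:.2f}")
print(f"J: {cost(w_old):.4f} -> {cost(w_new):.4f}")  # the cost goes down
```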

What If the Gradient is Negative?

Suppose we're at a point where the slope is negative: \(\nabla J(w) = -0.4\), with learning rate \(\eta = 0.1\). The update rule becomes:

$$w_{\text{new}} = w_{\text{old}} - 0.1 \cdot (-0.4) = w_{\text{old}} + 0.04$$

The weight increases. A negative slope means the cost falls as \(w\) grows, so to go downhill we again move opposite to the slope, i.e., we increase the weight.
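A similar Python sketch covers the negative case and also shows what would happen if we stepped *with* the gradient instead of against it. It again assumes the hypothetical cost \(J(w) = w^2\), this time at \(w_{\text{old}} = -0.2\), where the slope is \(-0.4\); the cost function and starting point are illustrative assumptions.

```python
def cost(w: float) -> float:
    """Hypothetical cost used only for this example: J(w) = w^2."""
    return w ** 2


def grad(w: float) -> float:
    """Gradient of J(w) = w^2."""
    return 2.0 * w


eta = 0.1
w_old = -0.2                 # assumed starting point where the slope is -0.4
g = grad(w_old)              # 2 * (-0.2) = -0.4 (negative slope)

w_descent = w_old - eta * g  # -0.2 + 0.04 = -0.16: the weight increases
w_ascent = w_old + eta * g   # -0.2 - 0.04 = -0.24: stepping along the gradient instead

print(f"start:   w = {w_old:.2f}, J = {cost(w_old):.4f}")
print(f"descent: w = {w_descent:.2f}, J = {cost(w_descent):.4f}")  # J decreases
print(f"ascent:  w = {w_ascent:.2f}, J = {cost(w_ascent):.4f}")    # J increases
```

In both cases the minus sign does the work: for a small enough \(\eta\), stepping against the gradient lowers the cost whether the slope is positive or negative, while stepping along it raises the cost.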